|

Nasim Souly

I received my PhD in Computer Science from University Of Central Florida Center for Research in Computer Vision (CRCV)

under supervision of Dr. Mubarak Shah .

My main research area is computer vision and machine learning specially saliency detection and semantic segmentation.

Before that, I had graduated in Master degree of Artificial Intelligence in Amirkabir University of Science and Technology in Tehran - Iran.

Email: nsouly@eecs.ucf.edu |

Projects:

|

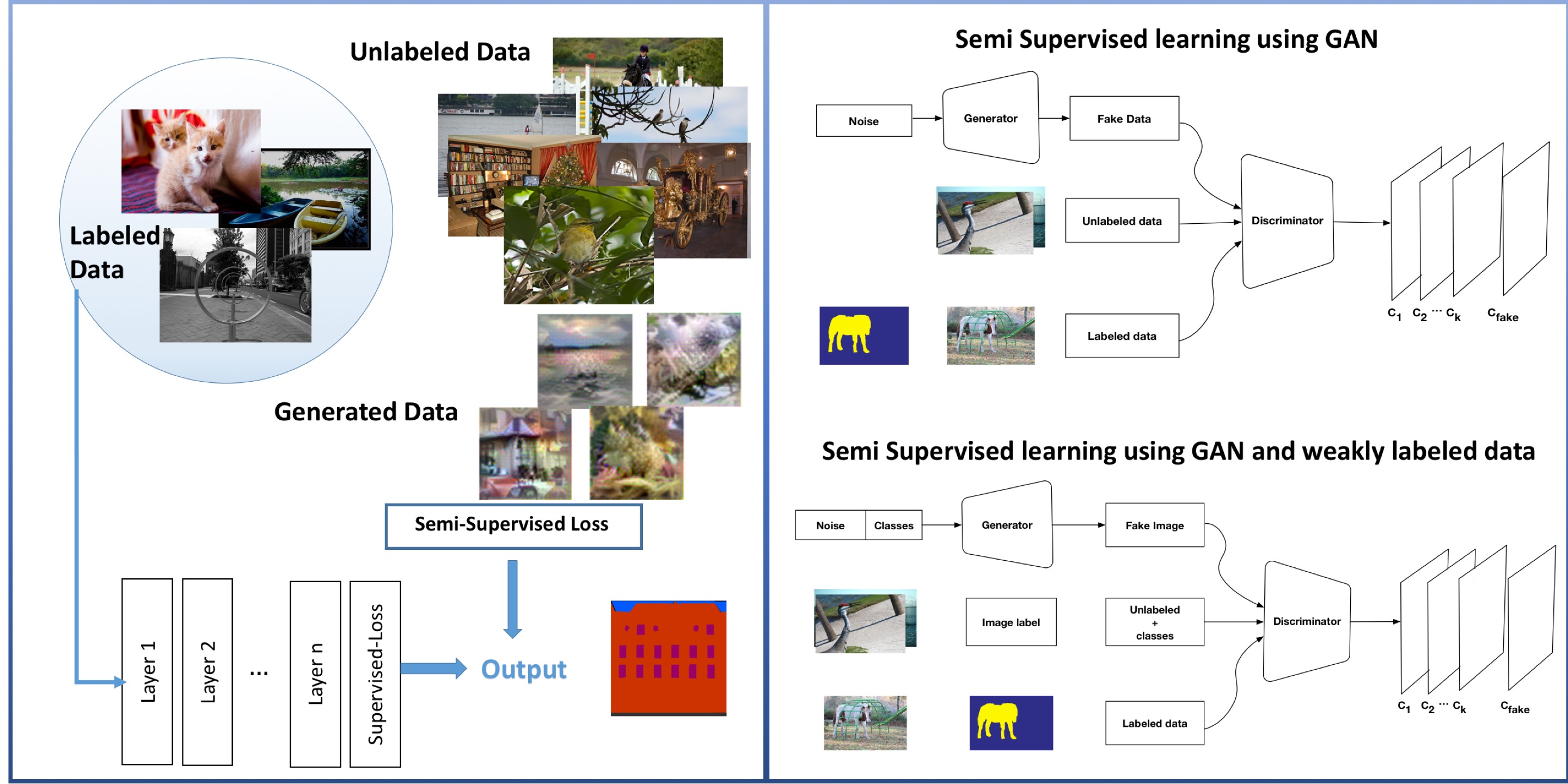

Semi Supervised Semantic Segmentation Using Generative Adversarial Network Abstract: Semantic segmentation has been a long standing challenging task in computer vision. It aims at assigning a label to each image pixel and needs significant number of pixellevel annotated data, which is often unavailable. To address this lack, in this paper, we leverage, on one hand, massive amount of available unlabeled or weakly labeled data, and on the other hand, non-real images created through Generative Adversarial Networks. In particular, we propose a semi-supervised framework – based on Generative Adversarial Networks (GANs) – which consists of a generator network to provide extra training examples to a multi-class classifier, acting as discriminator in the GAN framework, that assigns sample a label y from the K possible classes or marks it as a fake sample (extra class). The underlying idea is that adding large fake visual data forces real samples to be close in the feature space, enabling a bottom-up clustering process, which, in turn, improves multiclass pixel classification. To ensure higher quality of generated images for GANs with consequent improved pixel classification, we extend the above framework by adding weakly annotated data, i.e., we provide class level information to the generator. We tested our approaches on several challenging benchmarking visual datasets, i.e. PASCAL, SiftFLow, Stanford and CamVid, achieving competitive performance also compared to state-of-the-art semantic segmentation methods. [ Paper (Published in ICCV17)] [ Presentation file] |

|

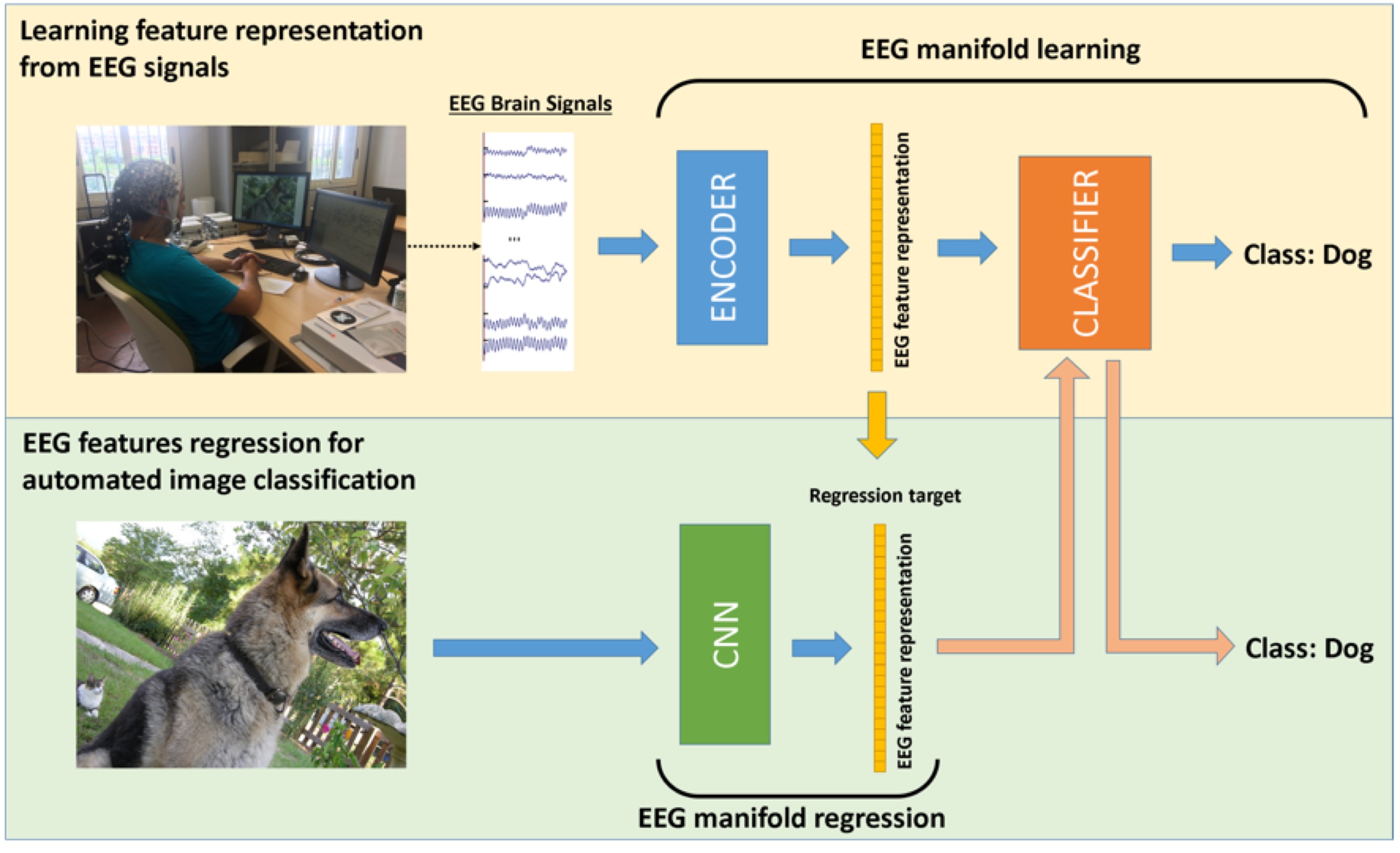

Deep Learning Human Mind for Automated Visual Classification This is a joint work with University of Catania which has been accepted as an oral presenattion at CVPR 2017. Abstract: What if we could effectively read the mind and transfer human visual capabilities to computer vision methods? In this paper, we aim at addressing this question by developing the first visual object classifier driven by human brain signals. In particular, we employ EEG data evoked by visual object stimuli combined with Recurrent Neural Networks (RNN) to learn a discriminative brain activity manifold of visual categories. Afterward, we train a Convolutional Neural Network (CNN)–based regressor to project images onto the learned manifold, thus effectively allowing machines to employ human brain–based features for automated visual classification. We use a 32-channel EEG to record brain activity of seven subjects while looking at images of 40 ImageNet object classes. The proposed RNN-based approach for discriminating object classes using brain signals reaches an average accuracy of about 40%, which outperforms existing methods attempting to learn EEG visual object representations. As for automated object categorization, our human brain–driven approach obtains competitive performance, comparable to those achieved by powerful CNN models, both on ImageNet and CalTech 101, thus demonstrating its classification and generalization capabilities. This gives us a real hope that, indeed, human mind can be read and transferred to machines. [ Paper] |

|

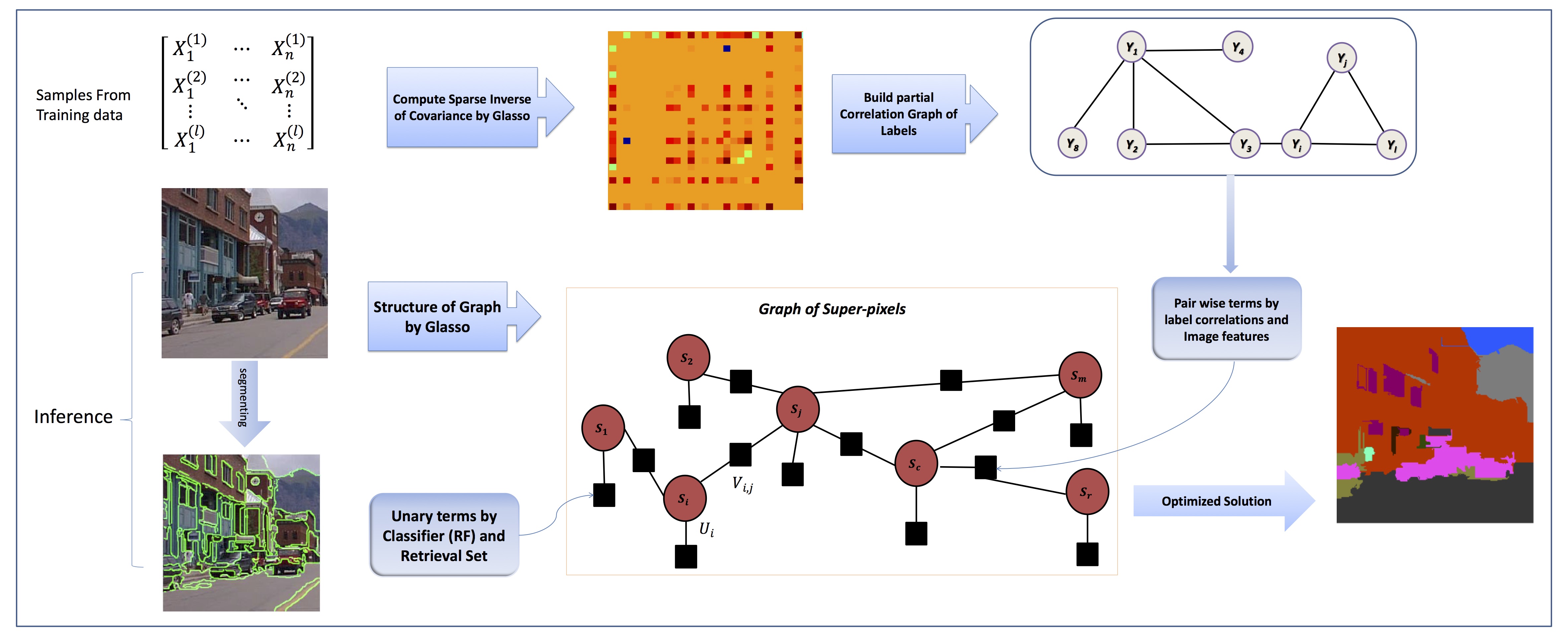

Scene Labeling Using Sparse Precision Matrix Abstract: Scene labeling task is to segment the image into meaningful regions and categorize them into classes of objects which comprised the image. Commonly used methods typically find the local features for each segment and label them using classifiers. Afterwards, labeling is smoothed in order to make sure that neighboring regions receive similar labels. However, these methods ignore expressive connections between labels and non-local dependencies among regions. In this paper, we propose to use a sparse estimation of precision matrix (also called concentration matrix), which is the inverse of covariance matrix of data obtained by graphical lasso to find interaction between labels and regions. To do this, we formulate the problem as an energy minimization over a graph, whose structure is captured by applying sparse constraint on the elements of the precision matrix. This graph encodes (or represents) only significant interactions and avoids a fully connected graph, which is typically used to reflect the long distance associations. We use local and global information to achieve better labeling. We assess our approach on three datasets and obtained promising results. [CVPR 2016 Paper] [Video Presentation] [ Presentation file] [ Code] [ Github] |

|

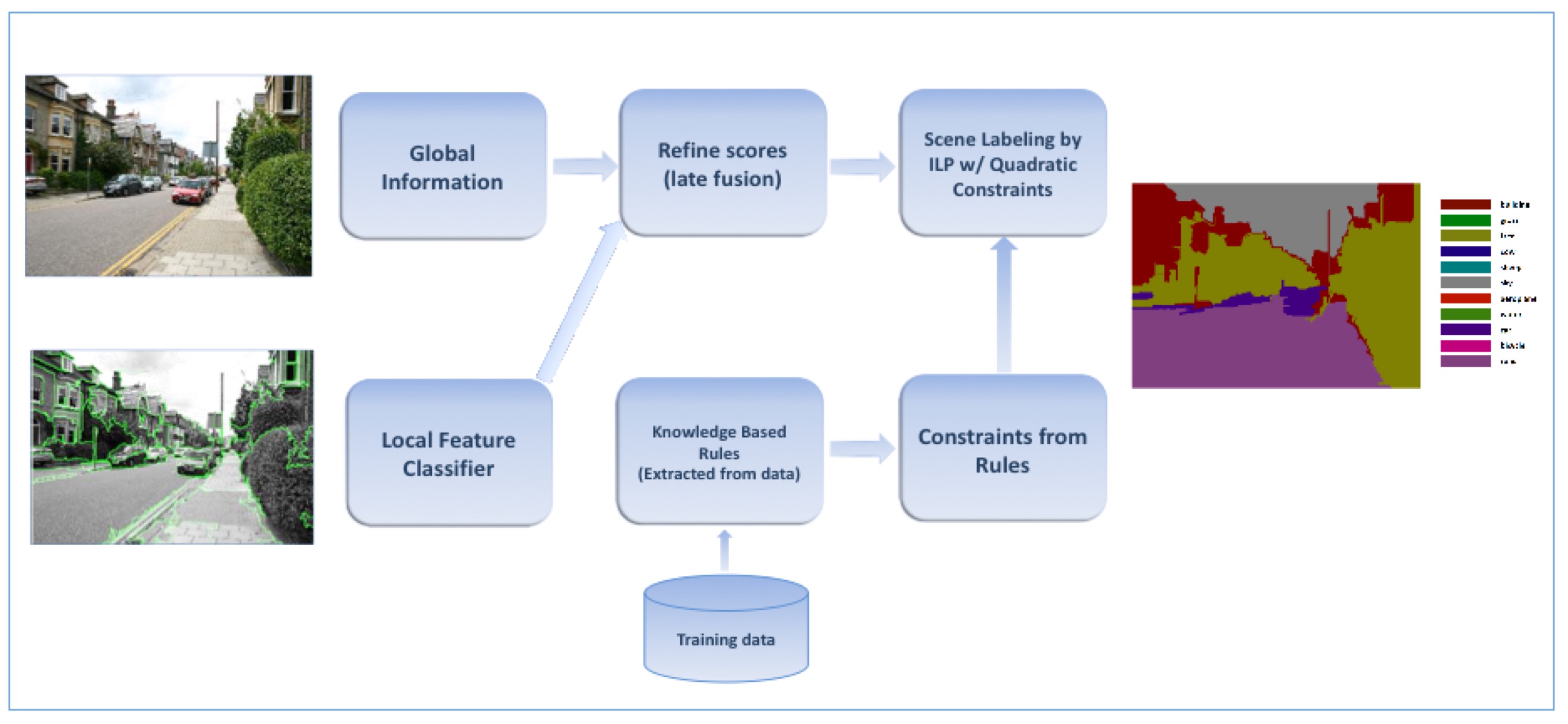

Scene Labeling Through Knowledge-Based Rules Employing Constrained Integer Linear Programing Abstract: Scene labeling entails segmentation of an image into meaningful regions or superpixels and their categorization into classes of objects composing the image. Commonly used methods typically find the local features for each segment and label them using classifiers. Afterward, labeling is smoothed locally in order to make sure that neighboring regions receive similar labels. However, these methods ignore expressive and non-local dependencies among regions as they require expensive training and inference. In this paper, we propose to use high level knowledge regarding rules- such as presence, implication and mutual exclusivity- in the inference to incorporate dependencies among regions in the image to improve scores of scene labeling. Towards this aim, we extract knowledge-based rules from training data and transform them into constraints in Integer Programming to optimize the structured problem of assigning labels to super-pixels (consequently pixels) in an image. In addition, we propose to use softconstraints, which permit violation of hard constraints by imposing penalties, and can be solved through slack variables; thereby yielding a more flexible model. Furthermore, we learn scene-label association weights in order to employ global context to improve label confidences. In doing so, we use a deep neural network trained to obtain scene categories in an image, and we learn associations of image-level information with labels of super-pixel through a non-negative sparse regression model. We assess our approach on three datasets and obtain promising results. [ Paper] |

|

Visual Saliency Detection Using Group Lasso Regularization in Videos of Natural Scenes Abstract: Visual saliency is the ability of a vision system to promptly select the most relevant data in the scene and reduce the amount of visual data that needs to be processed. Thus, its applications for complex tasks such as object detection, object recognition and video compression have attained interest in computer vision studies. In this paper,we introduce a novel unsupervised method for detecting visual saliency in videos of natural scenes. For this, we divide a video into non-overlapping cuboids and create a matrix whose columns correspond to intensity values of these cuboids. Simultaneously, we segment the video using a hierarchical segmentation method and obtain super-voxels. A dictionary learned from the feature data matrix of the video is subsequently used to represent the video as coefficients of atoms. Then, these coefficients are decomposed into salient and nonsalient parts. We propose to use group lasso regularization to find the sparse representation of a video, which benefits from grouping information provided by super-voxels and extracted features from the cuboids.We find saliency regions by decomposing the feature matrix of a video into low-rank and sparse matrices by using robust principal component analysis matrix recovery method. The applicability of our method is tested on four video data sets of natural scenes. Our experiments provide promising results in terms of predicting eye movement using standard evaluation methods. In addition, we show our video saliency can be used to improve the performance of human action recognition on a standard dataset. [Project Page] [Related Publication ] |

|

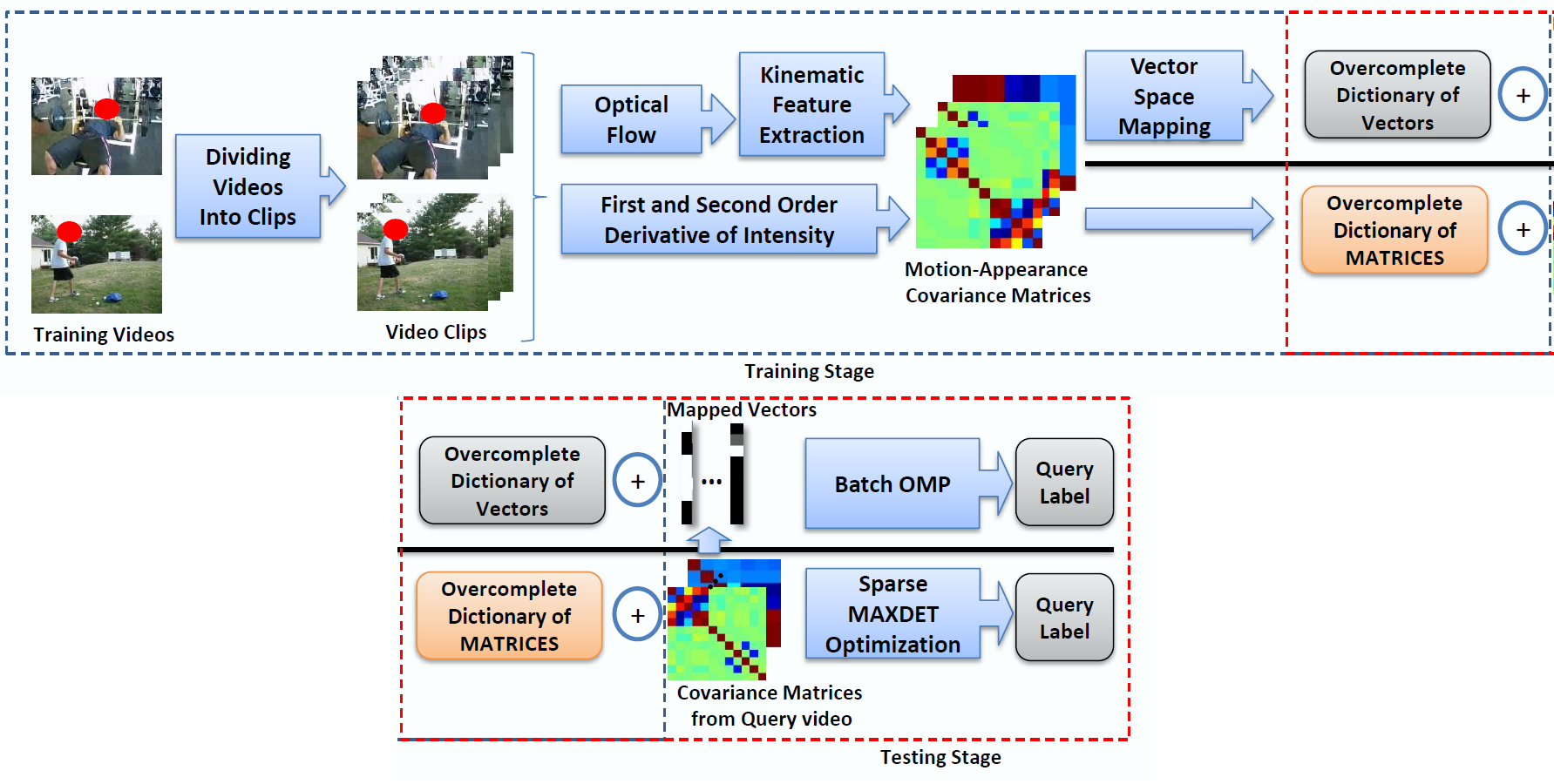

Covariance of Motion and Appearance Features for Human Action and Gesture Recognition Abstract:

In this work, we introduce a novel descriptor for general purpose video analysis. In our approach, we

compute kinematic features from optical flow and first and second-order derivatives of intensities to represent motion and appearance

respectively. These features are then used to construct covariance matrices which capture joint statistics of both low-level motion

and appearance features extracted from a video. Using an over-complete dictionary of the covariance based descriptors built from

labeled training samples, we formulate low-level event recognition as a sparse linear approximation problem. Within this, we pose the

sparse decomposition of a covariance matrix, which also conforms to the space of semi-positive definite matrices, as a determinant

maximization problem. Also since covariance matrices lie on non-linear Riemannian manifolds, we compare our former approach with

a sparse linear approximation alternative that is suitable for equivalent vector spaces of covariance matrices. This is done by searching

for the best projection of the query data on a dictionary using an Orthogonal Matching pursuit algorithm.

We show the applicability of our video descriptor in two different application domains - namely low-level event recognition in

unconstrained scenarios and gesture recognition using one shot learning. Our experiments provide promising insights in large scale

video analysis.

[Arxiv]

Contribution: UCF is a part of SRI-Sarnoff team and this work was part of ALADDIN projects. |